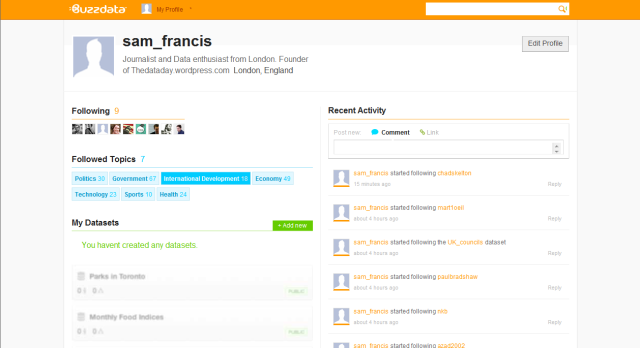

Earlier this month, data journalism got its very own social network with the launch of Toronto-based website BuzzData.

Users of this new service can upload data, visualisations, articles and background on a topic or story for other users to ‘follow’ and pore over themselves.

The thought of sharing secretive scoops and hard-fought-for data would cause a prickly sweat to form on the back of most journalists’ necks.

But this is by no means the first attempt to connect journalists working with data sets: see Socrata, Paul Bradshaw’s Help Me Investigate and, to a lesser extent, the public nature of Many Eyes.

We at TheDataDay think this new, more community-focused attempt could be a major step towards breaking down the silos of information that build up among journalists.

How does BuzzData work?

By way of explaining their mission, BuzzData quoted the words of Antoine de Saint-Exupéry:

“If you want to build a ship, don’t drum up people together to collect wood and don’t assign them tasks and work, but rather, teach them to long for the endless immensity of the sea.”

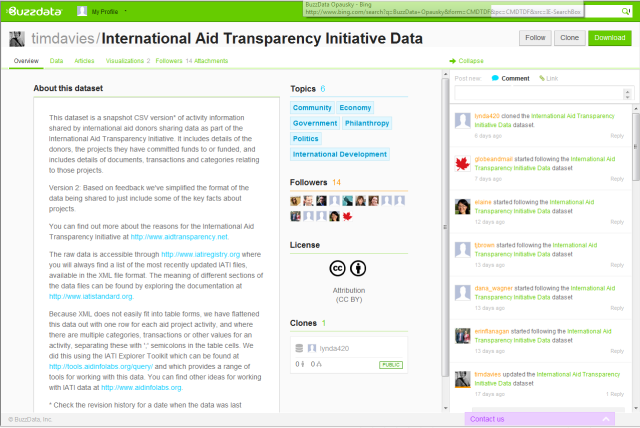

Once signed up, users can upload data sets along with any related articles, visualisations and background documentation on a topic or story.

Each data set is given its own profile, which allows users to build up a conversation around the data, either by leaving comments or by linking and adding their own relevant information to the mix, progressively (or so the idea goes) adding more context.

Publishing the information publicly allows anyone to clone and download the raw data, but BuzzData also allows you to upload sets into closed networks (allowing some of the traditional journalistic smoke and mirrors to remain).

How will BuzzData help us data journalists?

BuzzData hope that their site will one day be a place where not only journalists but also policy makers can come together and innovate.

In an interview with Journalism.co.uk, Mark Opausky, CEO of BuzzData, said:

“[BuzzData] allows the story to live on and in some cases spin out other more interesting stories. The journalists themselves never know where this data is going to go and what someone on the other side of the world might do with it.”

According to BuzzData’s own blog:

“Our goal is to create a place where users — whether they’re individuals, news agencies, science labs, governments — have the power to publish, build, revise and expand existing data into information that’s more current, accurate, accessible and ultimately useful than any version of data they might create alone.

Social functionality and easy dataset publishing is just Stage 1 of BuzzData’s ultimate vision. We really hope you’re enjoying it. Stay tuned, because there’s a lot more in store for you.”

And with over $1 million of investment already secured, we at TheDataDay think that it might not be a bad idea to get in on the ground floor of this venture.

Users can sign up here. Let us know your experiences in the comment section below.